First published on Optimizely.com

Traditional Landing Page Optimization (LPO) tips don’t work anymore. Why? Because your visitors are individuals with unique preferences, behaviors, and pain points. See how landing page optimization is changing.

So, you want to optimize a landing page for lead generation…

Back in the day, adding a catchy headline and a flashy CTA button on your landing page was enough to make it rain leads. However, those days are as dead as MySpace.

Traditional Landing Page Optimization (LPO) tips don’t work anymore because your visitors aren’t nameless, faceless clicks. They’re individuals with unique preferences, behaviors, and pain points. And they expect you to know it.

Enter personalization. It’s turning landing pages from generic billboards into dynamic, revenue-generating machines. In this post, see how landing page optimization is changing, along with some of the best landing page optimization examples.

LPO and personalization: A significant shift in consumer behavior

Landing page optimization isn’t dead. It’s evolved.

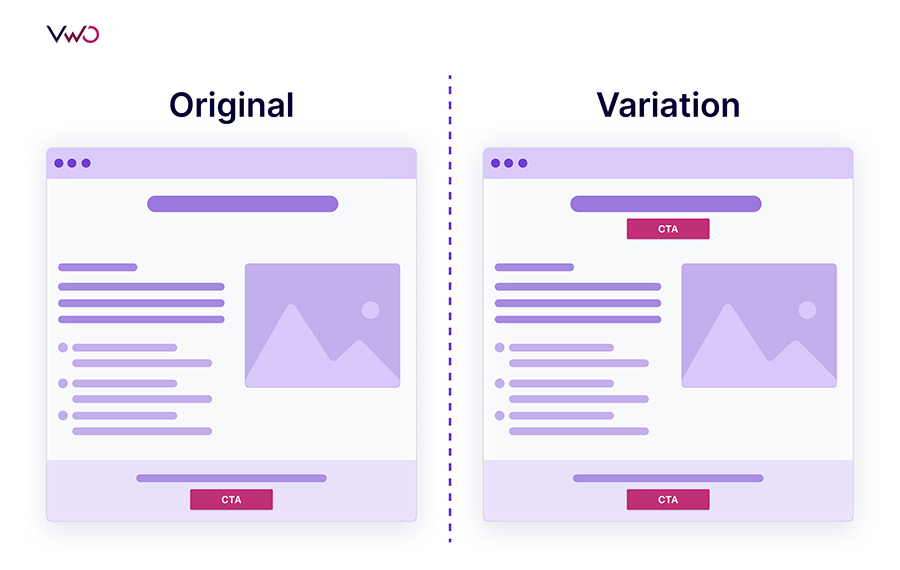

Epsilon found that 80% of consumers are likely to open their wallets if a brand serves up personalized experiences. Landing page optimization is still about converting visitors into leads or customers, but you can’t just A/B test button colors anymore (though that still has its place).

Personalization is taking LPO to the next level. It allows your landing page to:

- Predict what each visitor wants based on their behavior and data

- Serve up content that addresses their specific pain points

- Adjust in real-time based on visitor interactions

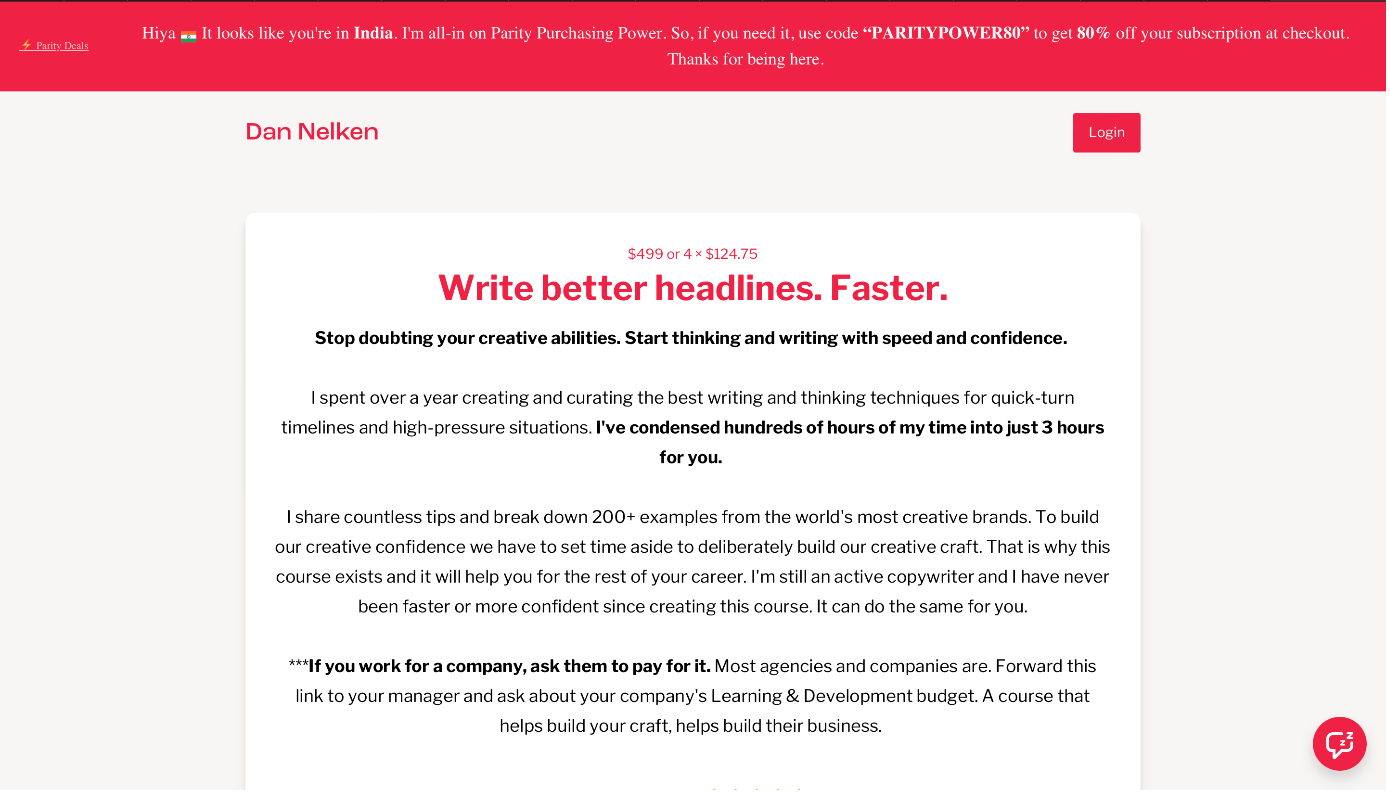

Consider this landing page optimization example:

Creators are generating more revenue by offering country-specific pricing options.

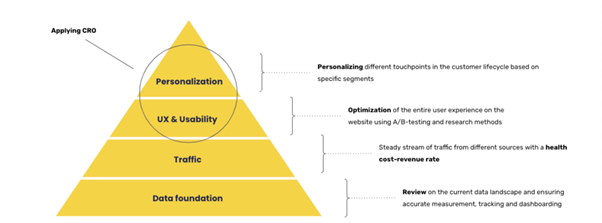

Building blocks for personalized landing page optimization

So, how do you implement personalization to produce optimized landing pages? Let’s break down the essential components:

- Data infrastructure

Your personalization engine runs on data. Build a solid foundation with:

- First-party data collection: Use progressive profiling, behavioral tracking, and direct feedback.

- Data integration: Combine CRM, web analytics, social media, purchase history, and support data.

- Consider a Customer Data Platform (CDP) to create comprehensive visitor profiles.
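To make the data integration step concrete, here is a minimal sketch of stitching records from several systems into one visitor profile. It assumes every source can be joined on an `email` field; the field names and sample records are hypothetical, and a real CDP handles identity resolution far more carefully than this.

```python
from collections import defaultdict


def build_profiles(*sources):
    """Merge records from several sources into one profile per visitor.

    Each source is a list of dicts sharing a hypothetical `email` key.
    When the same field appears twice, the later source wins.
    """
    profiles = defaultdict(dict)
    for records in sources:
        for record in records:
            key = record["email"].strip().lower()  # normalize the join key
            fields = {k: v for k, v in record.items() if k != "email"}
            profiles[key].update(fields)
    return dict(profiles)


# Made-up sample records from three systems
crm = [{"email": "Ana@example.com", "company": "Acme", "plan": "trial"}]
analytics = [{"email": "ana@example.com", "pricing_page_views": 3}]
support = [{"email": "ana@example.com", "open_tickets": 1}]

profiles = build_profiles(crm, analytics, support)
print(profiles["ana@example.com"])
```

Normalizing the join key matters more than it looks: without it, `Ana@example.com` and `ana@example.com` would end up as two separate visitors.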

- Content strategy

Personalization demands variety. Develop a scalable content approach:

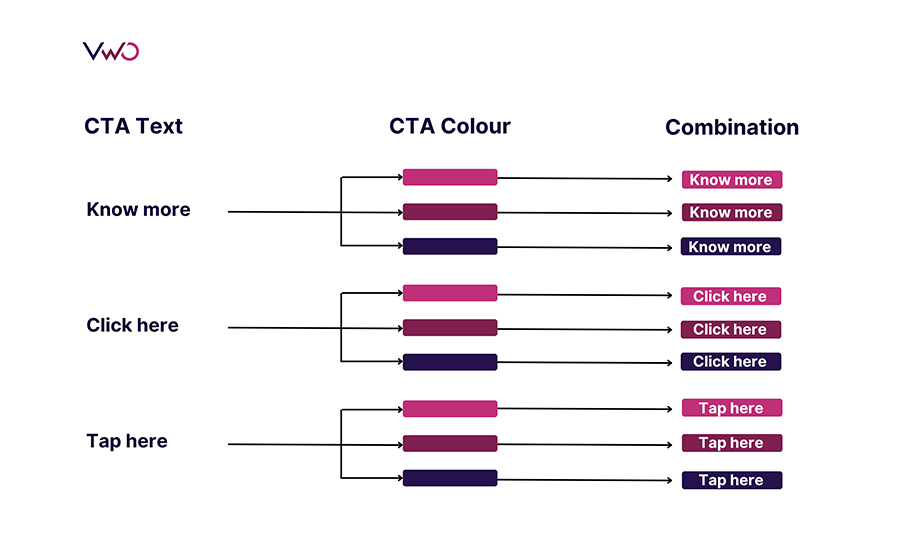

- Dynamic content generation: Use tools to create variations of core messages, including personalized descriptions and CTAs.

- Modular content: Break content into reusable pieces for easy mix-and-match personalization.

- AI and machine learning

Harness AI to bring data and content together:

- Predictive analytics: Forecast user behavior to serve relevant content.

- Real-time decision-making: Employ systems that quickly choose content for each visitor and learn from interactions.

Continually optimize your approach based on performance data and evolving user needs.
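One simple way to picture a system that "chooses content and learns from interactions" is an epsilon-greedy bandit. The sketch below is an illustration of that general technique, not how any particular personalization platform works; the class name, variant names, and the 10% exploration rate are all assumptions.

```python
import random


class EpsilonGreedy:
    """Mostly show the best-converting variant, but keep exploring a little."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.epsilon = epsilon  # share of traffic used for exploration
        self.shows = {v: 0 for v in variants}
        self.conversions = {v: 0 for v in variants}
        self.rng = random.Random(seed)

    def rate(self, variant):
        """Observed conversion rate (0.0 until the variant has been shown)."""
        shows = self.shows[variant]
        return self.conversions[variant] / shows if shows else 0.0

    def choose(self):
        """Pick a variant for the next visitor."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))  # explore
        return max(self.shows, key=self.rate)  # exploit the current best

    def record(self, variant, converted):
        """Feed the outcome of one impression back into the model."""
        self.shows[variant] += 1
        self.conversions[variant] += int(converted)


bandit = EpsilonGreedy(["headline_a", "headline_b"], epsilon=0.1, seed=42)
bandit.record("headline_a", converted=False)
bandit.record("headline_b", converted=True)
print(bandit.choose())
```

The trade-off is the usual one: a higher `epsilon` learns faster about weak variants but sends more visitors to them in the meantime.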

Landing page optimization tips for 2025

Here are five tips to consider when optimizing a landing page for lead generation.

1. Dynamic content adjustment

Adapt your landing page to each visitor’s behavior in real-time.

- Adjust page elements based on user actions

- Emphasize value propositions for price-sensitive visitors

- Showcase features for capability-focused users
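As a rough illustration of the bullets above, the sketch below decides which message to emphasize from a visitor's session events. The event names and the simple "count the signals" rule are hypothetical; in practice you would map these to whatever your analytics setup actually tracks.

```python
# Hypothetical event names; replace with the events your tracking emits.
PRICE_SIGNALS = {"viewed_pricing", "opened_discount_faq", "toggled_annual_billing"}
FEATURE_SIGNALS = {"viewed_integrations", "opened_api_docs", "watched_demo"}


def pick_emphasis(events):
    """Return which content block to emphasize for this visitor."""
    price_score = sum(1 for e in events if e in PRICE_SIGNALS)
    feature_score = sum(1 for e in events if e in FEATURE_SIGNALS)

    if price_score > feature_score:
        return "value_proposition"  # price-sensitive visitor
    if feature_score > price_score:
        return "feature_showcase"  # capability-focused visitor
    return "default"  # no clear signal yet


session = ["viewed_pricing", "toggled_annual_billing", "watched_demo"]
print(pick_emphasis(session))
```

Keeping a `default` branch is deliberate: a brand-new visitor with no history should see your standard page, not a guess.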

2. Personalized video content

Vidyard reports that 88% of consumers want to see more video content. Leverage video content:

- Create customized video greetings using visitor data

- Use dynamic overlays for personalized offers

- A/B test video styles and lengths for different segments

3. Search-optimized landing pages

This approach improves both search rankings and visitor relevance:

- Adjust content to match search intent

- Create personalized meta descriptions

- Use schema markup for better search engine understanding
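For the schema markup point, here is a small sketch that builds JSON-LD using the schema.org `Product` and `Offer` types. The product details and URL are placeholders, and which schema.org type fits best depends on what your landing page is actually selling.

```python
import json


def product_json_ld(name, description, price, currency, url):
    """Build a schema.org Product description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "url": url,
        },
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only
markup = product_json_ld(
    name="Starter plan",
    description="Landing page toolkit for small teams",
    price=49,
    currency="USD",
    url="https://example.com/starter",
)
print(markup)
```

The resulting JSON goes inside a `<script type="application/ld+json">` tag in the page's HTML.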

4. Conversational chatbots

According to Drift, 55% of businesses using chatbots effectively generate more high-quality leads.

- Use AI-powered chatbots for visitor guidance

- Employ natural language processing for better interactions

- Personalize chatbot responses based on user data to add a human touch

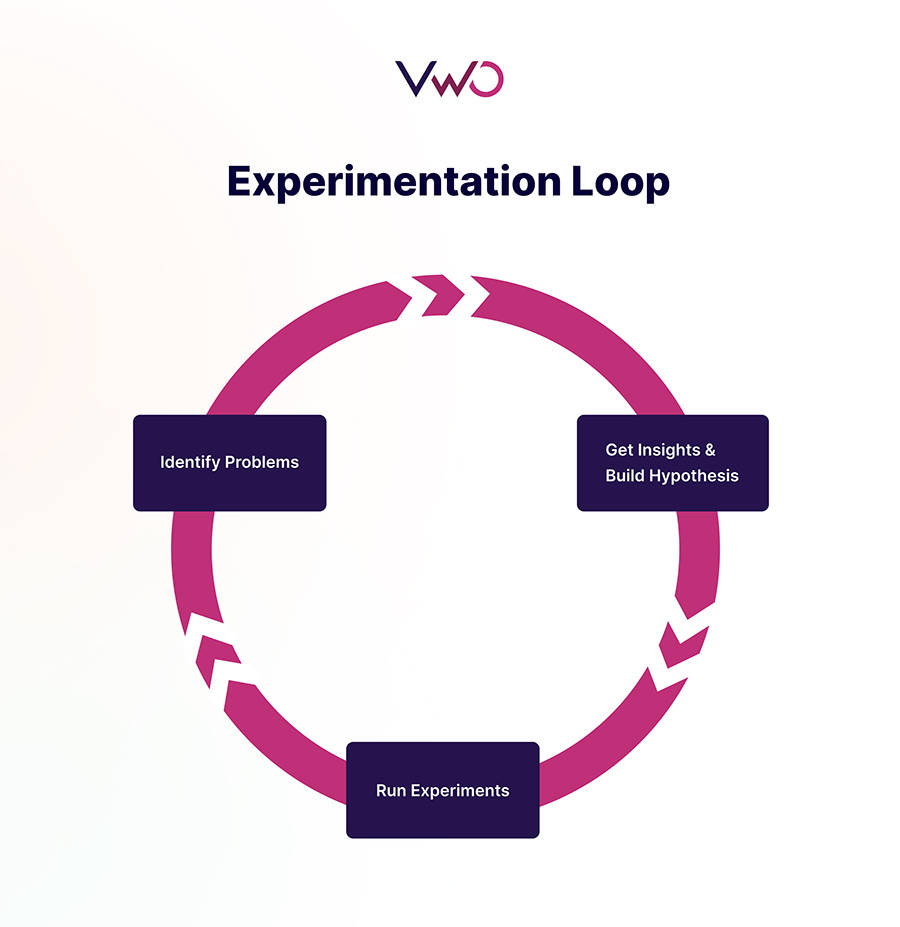

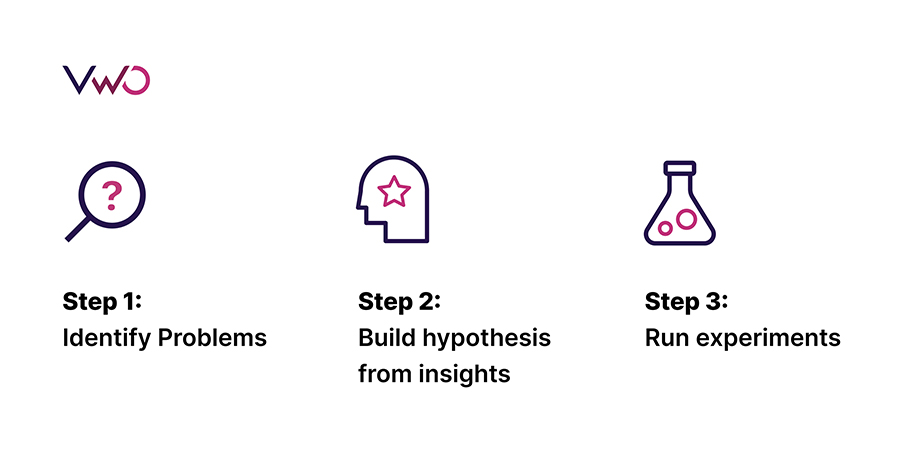

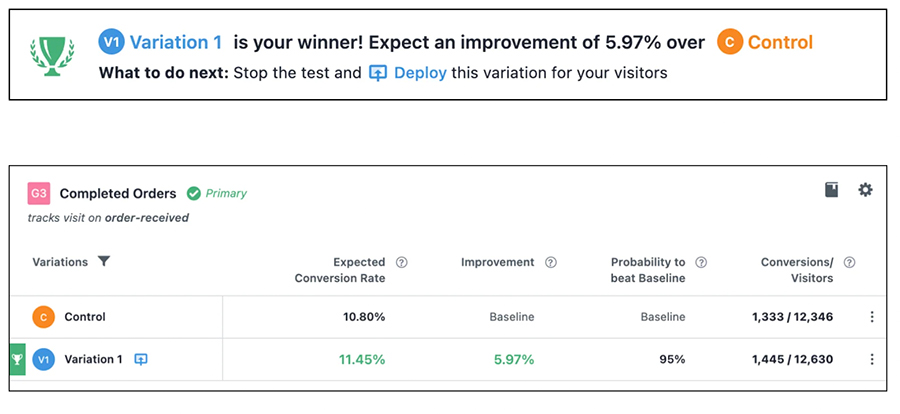

5. Advanced experimentation

Go for more nuanced, audience-specific optimization.

- Conduct multivariate tests across page elements

- Analyze results at a granular level for different segments
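To show what segment-level analysis can look like, the sketch below compares a control and a variant within each segment using a standard two-proportion z-test. The segment names and numbers are made up for illustration, and the helper function is an assumption of ours rather than part of any testing tool.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no detectable difference
    z = (p_b - p_a) / se
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - normal_cdf)


# Made-up results: (conversions, visitors) per arm, per segment
results = {
    "new_visitors": {"control": (40, 1000), "variant": (65, 1000)},
    "returning_visitors": {"control": (90, 1000), "variant": (92, 1000)},
}

for segment, arms in results.items():
    (conv_a, n_a), (conv_b, n_b) = arms["control"], arms["variant"]
    lift = (conv_b / n_b) / (conv_a / n_a) - 1
    p = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    print(f"{segment}: lift={lift:+.1%}, p={p:.3f}")
```

One caution: the more segments you slice into, the more likely one of them looks like a winner by chance, so treat a surprising segment result as a hypothesis to retest rather than a conclusion.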

Landing page optimization examples

Understanding your site audience is helpful before diving into optimization.

Site visitors typically fall into three categories:

- Ready to buy: These visitors are primed for conversion and need a clear, frictionless path to purchase.

- Will never buy: While not your target, their behavior can provide valuable insights into what doesn’t work.

- Interested, but need convincing: This group represents your greatest opportunity for optimization efforts.

Here are a few examples of landing pages optimized with these visitors in mind.

Example 1

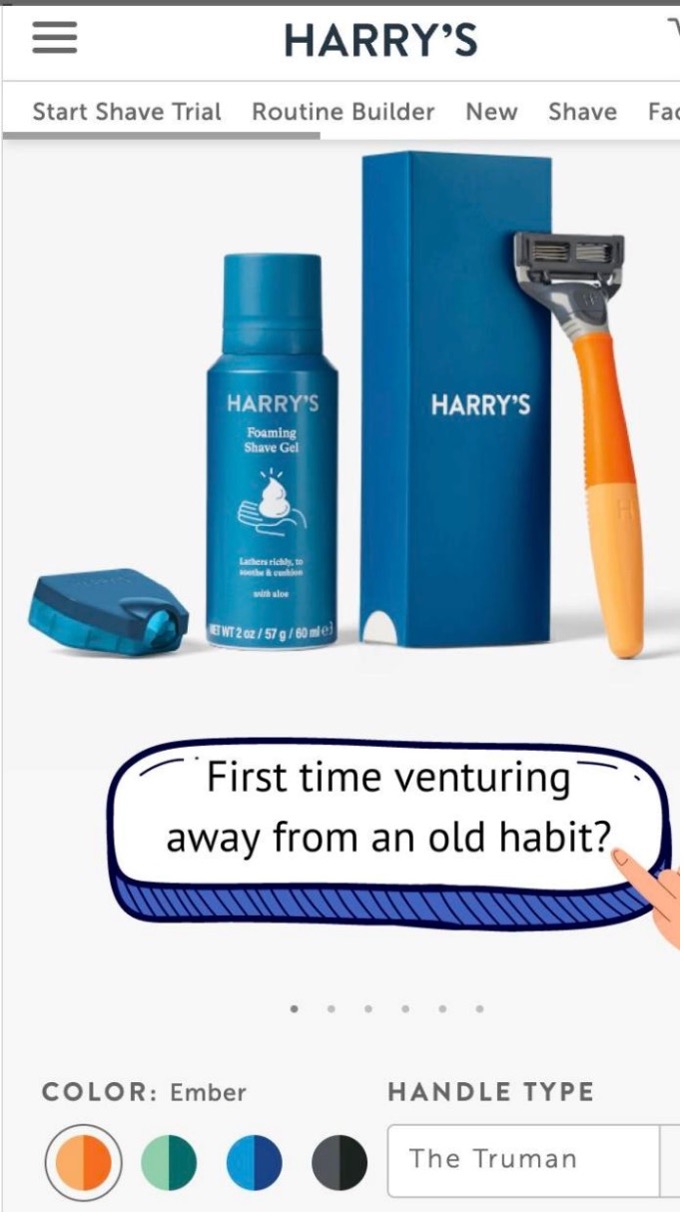

If you are a newcomer (like Harry’s) competing against a well-entrenched competitor who owns 50% of the market (Gillette), you can try a compelling CTA for your product page.

Example 2

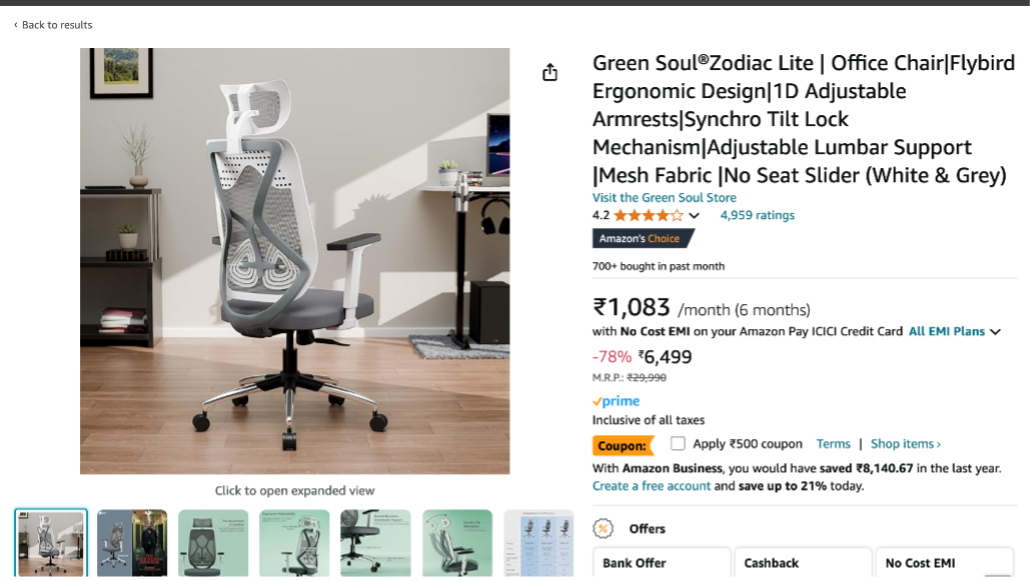

Effective marketing often favors clarity over creativity. Consider this headline:

- Large font size ensures readability.

- The key message is in the headline – the most-read element.

It may not win awards, but it’s likely to drive more sales.

Example 3

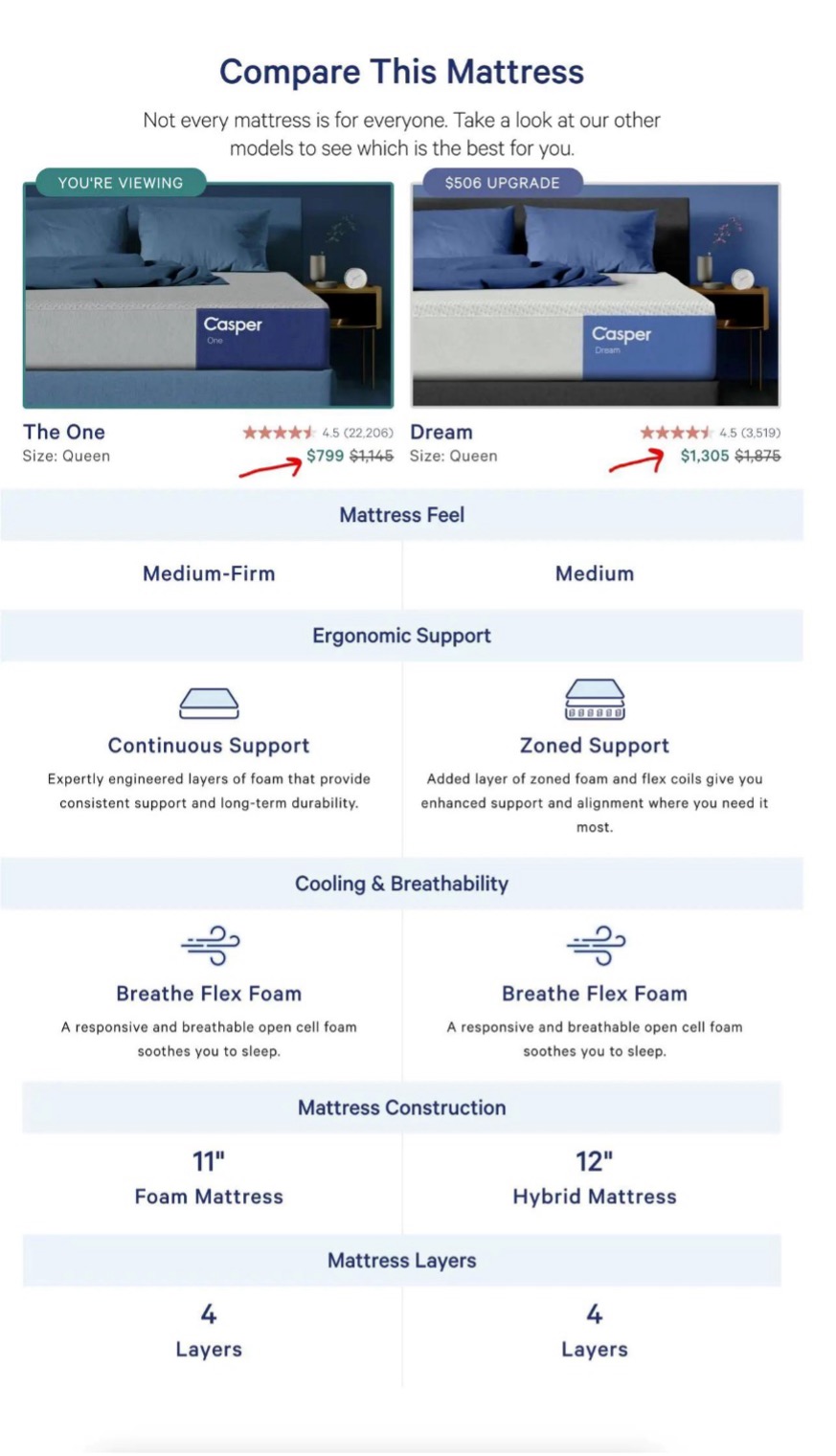

Very few visitors enter your site intending to buy.

Most enter to check things out quickly and from an arm’s length.

If the marketer can’t change this “quickly” and “arm’s length” mindset, there’s no hope of a sale happening.

Casper’s mattress page has a comparison table that compares the mattress you’re looking at with Casper’s higher-end model.

Landing page optimization tools

To implement the above tips, consider the following tools:

- Customer data platforms (CDPs): CDPs unify customer data from various sources. Top options include Segment and BlueConic.

- Personalization platforms: These create personalized experiences across digital properties. Consider Optimizely or Dynamic Yield.

- Analytics and attribution tools: Measure the impact of your personalization efforts with tools like Google Analytics 4 or Mixpanel. Look for predictive analytics and multi-touch attribution capabilities.

- Content Management Systems (CMS): Modern CMS platforms often include built-in personalization. Options include Optimizely CMS and Contentful (with extensions).

- Heatmapping and other optimization tools: For heatmapping consider Hotjar or Crazy Egg. For optimizing page speed, consider GTmetrix or PageSpeed Insights.

Landing page optimization: What’s next?

Soon, AI will be able to analyze hundreds of data points in real time to create truly unique experiences for each visitor. It will not just predict what a user might do next but will forecast entire customer journeys, allowing you to optimize for lifetime value from the very first interaction.

Imagine landing pages that can read and respond to your visitors’ emotions. Frustrated user? Your page could automatically simplify its layout. Excited prospect? It might dial up the enthusiasm in its messaging.

For that to happen, you need to foster a data-driven culture, develop clear guidelines for ethical AI use in marketing, and build flexible, integration-ready tech stacks.

A well-optimized landing page not only aligns with your overall marketing strategy but also gets your visitors converting faster than you can say “CTA”.

But before you bounce (unlike your soon-to-be-optimized landing page visitors), let’s wrap this up.

Optimizing your landing page isn’t just about making it pretty. It’s about:

- Nailing your value proposition

- Adding value instead of slashing prices

- Testing, iterating, optimizing

- Being transparent about your pricing

- Helping customers make the best choice

- Avoiding manipulative tactics

- Regularly analyzing user behavior

Working with an optimization platform like Optimizely allows your team to be more data-driven, user-focused, and results-oriented. It fuels creativity, efficiency, and most importantly, conversions.

Want to learn more? Here are the resources we recommend:

- AI playbook for marketers

- Guide to creating a bada$$ website strategy

- How digital leaders are thinking about personalization

About the author: Anubhav Verma is Associate Content Marketing Manager at Optimizely, one of this year’s edition of Experimentation Heroes.

Katie Leask is Global Head of Content at Contentsquare, sponsor of the DDMA Dutch CRO Awards 2022. During the award ceremony on November 3 in B. Amsterdam, we will crown the very best CRO cases the Dutch marketing industry has to offer. Do you want to attend? Get your tickets at:
